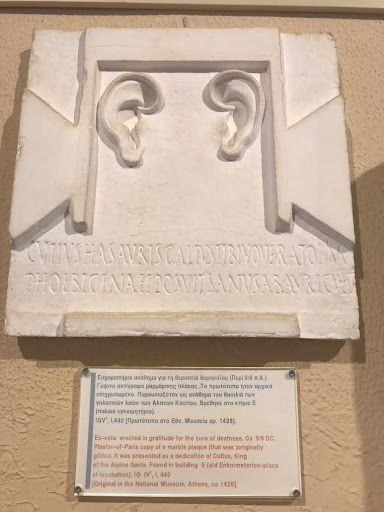

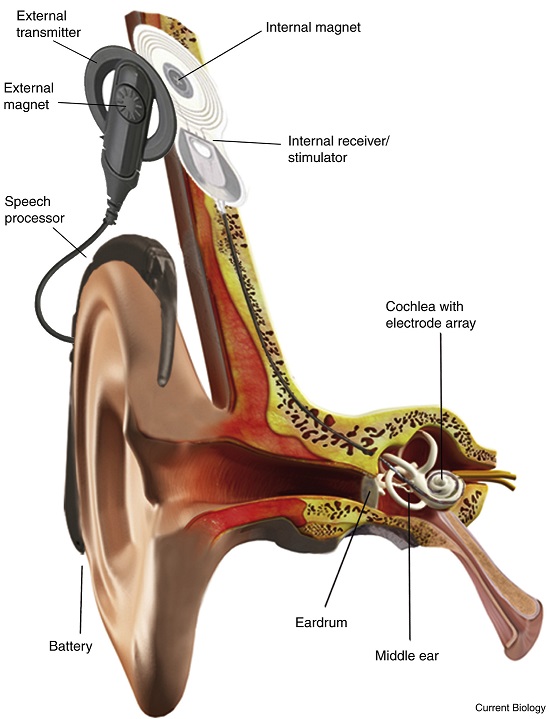

The hope of curing deafness is ancient. In the sanctuary of Epidaurus, the ancient Greek healing centre that is also home to the famous classical theatre, a votive stone asks the gods for the grace of a cure for deafness. In our times, this wish has been at least partially granted. What revolutionised the world of deafness in the 1980s was the cochlear implant, the so-called bionic ear. This electronic device, partly placed under the skin during a minor surgical procedure, converts sounds into electrical impulses and delivers them directly to the auditory nerve and thus to the brain. Implanted in young children and in adults who have become deaf, it has enabled many to recover their hearing, to develop spoken language and to communicate more easily with people who do not know sign language.

However, much remains to be understood about the relationship between auditory experience and language, and about how language develops when access to sound comes late. A study conducted by a research group at the IMT School that focuses precisely on the neural systems underlying language comprehension is helping to clarify how the brains of children with cochlear implants process natural language. It also sheds light on how language develops in the early stages of life: how much depends on innate predisposition and how much on the experience gained through hearing.

For the study, conducted in collaboration with the Meyer paediatric hospital in Florence, the Burlo Garofolo paediatric hospital in Trieste, and the universities of Milan Bicocca and Trento, almost one hundred children aged from two and a half years to adolescence took part. They included children who had been deaf from birth or had become deaf after their first year of life and had received a cochlear implant, as well as a group of peers with normal hearing. Their brain activity was recorded while they listened to passages of spoken language: fairy tales and stories.

"This is the first work in the world to directly measure, using electroencephalography, how the brains of children who have received a cochlear implant learn to listen to and process natural language," explains Davide Bottari, researcher at the MoMiLab, head of the SEED group and coordinator of the study. "The most innovative aspect of the study is precisely the method by which it was conducted. Generally, to understand how much hearing experience matters in language learning, studies have analysed how the brain responds to small units of language, for example single syllables. This situation is very different from natural language, which people with cochlear implants can find difficult to follow, especially when there is background noise. In our case, we used special electroencephalographic techniques that allow us to measure how the brain processes natural language while the child is listening to it."

Normally, within a few tens of milliseconds of a sound's arrival, the brain produces a very rapid initial response. The study compared this response in children with cochlear implants and in hearing children. For these 'basic' aspects of language, there was almost no difference between the brain responses of children with implants and those of hearing children. The brains of implanted children were able to lock on to the 'rhythm' of speech, albeit with a delayed response, as if they were a little slower to represent how speech sounds change over time. "In fact, for the most basic properties of speech processing, whether or not children could hear in their first year of life made almost no difference, which supports the idea that the brain has a very robust predisposition for speech sounds," notes Alessandra Federici, a postdoc in the lab and first author of the article. However, the later a child received the implant, the longer this delay, confirming what other studies have shown: it is very important that these devices are implanted early.

With regard to more sophisticated aspects of speech processing, particularly those related to comprehension, the study did reveal some changes induced by the period of deafness preceding cochlear implantation. Children are known to understand language well before they begin to speak: as early as six to seven months of age they can recognise certain words and understand their meaning. And before their first birthday, their hearing becomes specialised for the sounds of their mother tongue. For these reasons, a key question is how early deafness may affect the development of the brain systems that enable language processing. "The data from the study suggest that the brains of children with cochlear implants may process words less efficiently, so much so that these children score lower than their hearing peers on comprehension tests about the fairy tales and stories they have heard," Federici says.

"Today, cochlear implants are being fitted in younger and younger children with early deafness. However, the period of deafness seems to leave its mark. In addition, approaches still vary between hospitals, both in the surgical technique used and in the timing of implantation, which ranges from six to eighteen months of age," says Bottari. "The aim of the research, which will continue in collaboration with four hospitals (Meyer in Florence, Burlo Garofolo in Trieste, Bambino Gesù in Rome and Martini in Turin), is to understand the best time to perform the implant, the most effective pre- and post-implantation rehabilitation procedures and, more generally, how the brain adapts to the new experience of hearing while integrating it with the other senses, such as sight. A big thank you goes to the children and their families who took part; without them, this research would not have been possible."